Champing at the qubit

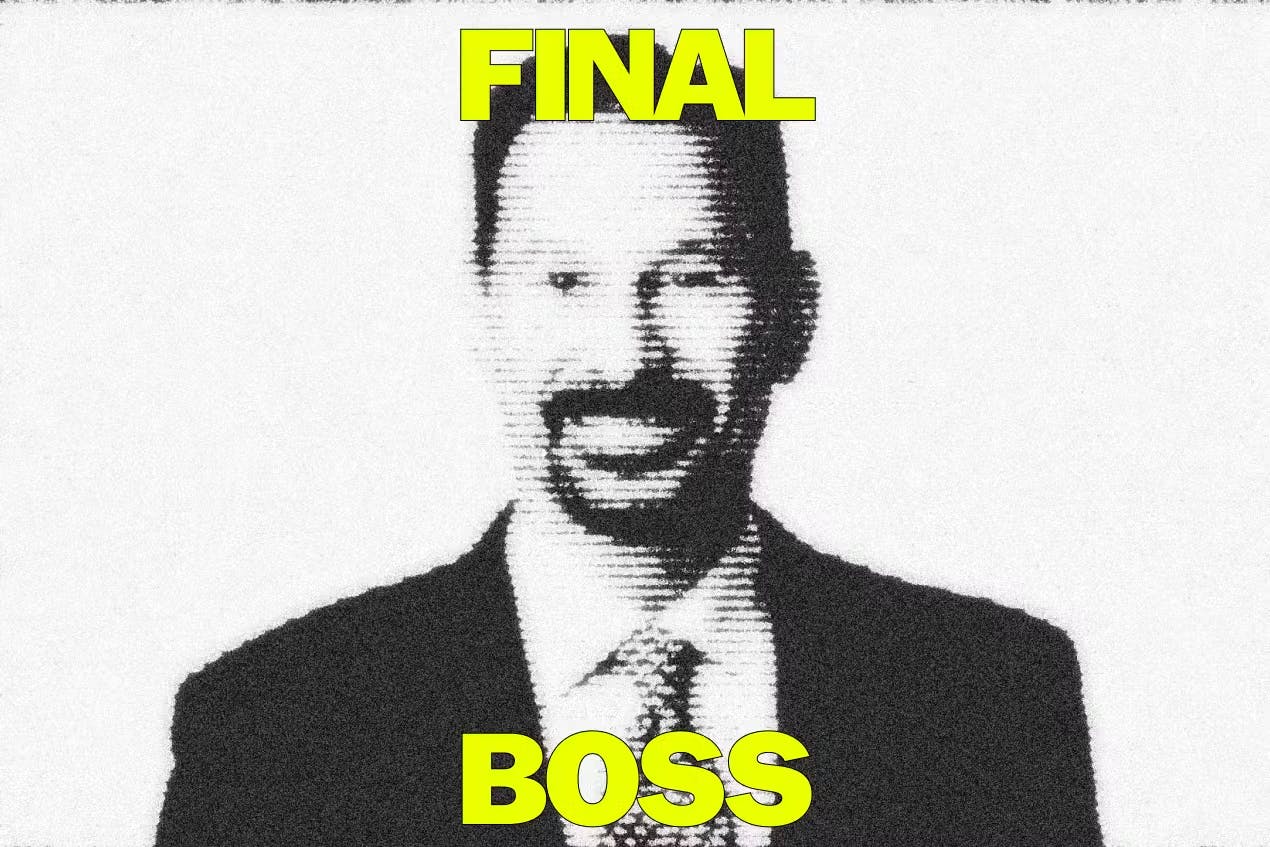

D-Wave Quantum’s CEO on what Jensen Huang gets “dead wrong,” the march to 1 million qubits, and “technological hocus-pocus”

Alan Baratz discusses the current business use cases for quantum computing, why his stock has soared, and how he’s really looking forward to his next earnings call.

American scientist Richard Feynman once quipped, “I think I can safely say that nobody understands quantum mechanics.”

Well, even if traders can’t comprehend the physics underpinning today’s hottest investment theme, they’ve still booked massive gains.

In the final two months of last year, relatively small quantum computing upstarts saw shares surge as much as 2,000%, punctuated by a massive spike after Alphabet announced a major computational breakthrough in December.

But enthusiasm for the cohort was zapped early in 2025 when Nvidia CEO Jensen Huang said it would take 15 to 30 years before quantum computers would be “very useful.”

D-Wave Quantum, which went public in 2022 via a SPAC, is one such firm that’s been whipsawed by the volatile shifts in sentiment. Shares vaulted more than 900% higher in 2024, only to lose more than a third of their value on Wednesday.

On Friday, the company provided updated guidance, saying its full-year 2024 bookings would be more than $23 million — that is, about 120% higher than the prior year.

Alan Baratz, CEO of the Palo Alto company, sat down with Sherwood News ahead of that announcement to discuss what makes the company different from its peers, how investors can distinguish between “technological hocus-pocus” and genuine progress in the industry, and what Nvidia’s CEO gets right and wrong about quantum computing.

This interview has been edited for clarity and length.

Sherwood: What is the distinction between quantum computing and “regular” computing, in terms of the problems quantum computers can solve?

Baratz: Quantum computing is simply using quantum mechanical effects — things like superposition, entanglement, tunneling — to solve hard computational problems faster than they can be solved with classical computers.

With classical computers you have bits, which can be 0 or 1. At any point in time, a classical computer is looking at and evaluating one possible solution to a problem as it searches to provide the optimal solution.

Quantum computing has qubits, which can be in superposition: they can be 0 and 1 and combinations of 0 and 1 at the same point in time, which means that multiple solutions can be evaluated at the same time, making the search for the optimal solution much faster.
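To make the bits-versus-qubits contrast concrete, here is a minimal classical simulation in Python. It was written for this article and is not taken from D-Wave or any quantum SDK. It assumes the textbook gate-model picture: starting from all zeros, a Hadamard gate on each of n qubits produces a state with 2^n equal amplitudes, one for every possible bit string. The helper names are hypothetical. Measuring such a register still returns a single bit string, sampled with these probabilities.

```python
import math

# Hadamard gate: sends |0> to an equal mix of |0> and |1>.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(state, gate, target, n):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector."""
    new = state[:]
    step = 1 << target
    for i in range(2 ** n):
        if i & step == 0:  # i has the target bit at 0; j is its partner with the bit at 1
            j = i | step
            a0, a1 = state[i], state[j]
            new[i] = gate[0][0] * a0 + gate[0][1] * a1
            new[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new

def hadamard_all(n):
    """Start in |00...0> and apply a Hadamard to every qubit."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    for q in range(n):
        state = apply_gate(state, H, q, n)
    return state

n = 3
state = hadamard_all(n)
for index, amplitude in enumerate(state):
    # Probability of measuring each bit string is |amplitude|^2.
    print(format(index, f"0{n}b"), round(amplitude ** 2, 3))
```

Each added qubit doubles the length of the state vector, which is also why simulating large quantum systems on classical hardware quickly becomes impractical.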

Sherwood: Looking at the development of D-Wave’s business, one of your earliest customers was Lockheed Martin back in 2010. How has the company evolved, in terms of what you were able to do then versus what you’re able to do now?

Baratz: We’re now on our fifth-, soon to be sixth-generation quantum computer. Over the course of the past 10 to 15 years, we have gone from 500 qubits to where we are today, which is over 5,000 qubits, so an order of magnitude increase. But it’s also about connectivity. Back then, each qubit was connected to six others. Today, that’s 15 others. It’s also about coherence time — the time that the qubit can live in the quantum state — and we’ve dramatically increased coherence time, which means we can solve problems much faster today than we could back then.

So basically the size of the system has scaled dramatically, allowing us to solve larger and more complex problems. The coherence time has increased dramatically, allowing us to compute solutions much faster. And finally, the precision with which we can specify the problem parameters has increased, which allows us to find better solutions.

That has allowed us to become commercial. We are now at the point where there are a number of important business applications that have been developed on our current-generation quantum system, some of those actually being used today in production by our customers to improve their business operations.

Sherwood: What do you enable businesses to do? More? Better? Faster?

Baratz: Broadly speaking, the application areas where we do really well today are business optimization problems. There are many, many business optimization problems. In fact, most, if not all, of the important, hard problems businesses need to solve fall into this category. Things like workforce scheduling, cargo container loading, or airline scheduling. Even protein folding. These are all optimization problems. What I’m trying to do is optimize some function against a number of constraints. These problems tend to be computationally very hard, what we call exponentially hard problems. The time to solve them grows exponentially with the size of the problem. In many cases, when you get to even a few hundred or a few thousand variables, they’re out of reach of classical computers to solve optimally.

What do businesses do today? Well, they try to simplify the problem. Or they try to use heuristics to come up with what they hope are good enough solutions. Well, we come in with our quantum systems and we are able to solve the problems either at full scale, or faster, or with better solutions than they are getting today. And that’s what delivers a benefit for the business.
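The “exponentially hard” point can be illustrated with a toy search in Python. This is an assumption-laden sketch, not D-Wave’s method: it scores every assignment of n binary (0/1) variables against a small quadratic objective in QUBO form, one of the problem formats D-Wave’s annealers accept, and the coefficients in `Q` are hypothetical. Exhaustive search checks all 2^n assignments, so each extra variable doubles the work.

```python
from itertools import product

# Hypothetical toy objective: reward each variable set to 1 (diagonal terms),
# penalize neighboring variables that are both 1 (off-diagonal terms).
Q = {(0, 0): -1, (1, 1): -1, (2, 2): -1, (0, 1): 2, (1, 2): 2}

def qubo_energy(x, Q):
    """Sum of Q[i, j] * x[i] * x[j] over the listed coefficients (lower is better)."""
    return sum(coeff * x[i] * x[j] for (i, j), coeff in Q.items())

def brute_force_minimum(Q, n):
    """Try all 2**n binary assignments; return (best_x, best_energy, assignments_checked)."""
    best_x, best_e, checked = None, float("inf"), 0
    for x in product((0, 1), repeat=n):
        checked += 1
        e = qubo_energy(x, Q)
        if e < best_e:
            best_x, best_e = x, e
    return best_x, best_e, checked

print(brute_force_minimum(Q, 3))  # ((1, 0, 1), -2, 8)

# The search space doubles with every added variable.
for n in (10, 20, 30, 40):
    print(n, "variables:", 2 ** n, "assignments")
```

At 300 variables, 2^300 is already larger than 10^90, far beyond any exhaustive search, which is why businesses fall back on simplified models and heuristics.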

Sherwood: What are the current limitations on the ability of quantum computing to solve problems? How and when will the industry be able to overcome these?

Baratz: It depends on the class or the type of problem we’re talking about and the type of quantum computer we’re talking about. So let’s talk about our approach, and then I’ll talk a bit about the approach that literally everyone else is pursuing.

Sherwood: Is this annealing versus gate-based that we’re getting into here?

Baratz: Exactly. The two primary approaches are annealing and gate. D-Wave decided to start with annealing, and we decided to start there because it’s a much easier technology to work with. It’s easier to scale, so we’re at 5,000 qubits today while everyone else is at a few hundred, and it’s much less sensitive to noise and errors, which means we can deliver good if not optimal solutions to hard problems today without the need for error correction.

That has allowed us to become commercial today. We are the first and only quantum computing company that is commercial today, delivering a real business benefit today.

So when Jensen says it will be 15 to 30 years before you will see a useful quantum computer? Well, let’s just say he doesn’t fully understand the quantum industry, and he certainly doesn’t understand D-Wave, because we are commercial and delivering useful results today.

What’s the future for us? We will continue to invest in building larger quantum computers, from 5,000 qubits today, to 20,000, 50,000, 100,000, 1 million. And we think that it will be relatively straightforward for us to get there.

You may think that’s a questionable statement. But the thing to keep in mind is that we have increased the size of our quantum computers by simply putting more qubits on a chip generation after generation for five generations now. What we have not done yet is develop multi-chip solutions, where we have multiple chips interconnected.

With our technological approach to quantum, it’s actually relatively easy to interconnect multiple chips, which is where we are headed. If we’ve got a 5,000-qubit chip, and we interconnect four of them, which is pretty straightforward, we’re at 20,000 qubits in relatively short order.

We’re going to continue to scale the size of the systems, increase the coherence time, and support greater precision so that we can solve larger and more complex problems.

For example, if I want to solve a last-mile routing problem for a grocery chain, I may need tens of thousands of variables. I can do that today. In fact, we have that in production today with Pattison Food Group. But if I want to solve that problem for FedEx or UPS, it could be 50 million variables. So we need to continue growing the size of the system.

Sherwood: Now let’s talk about the gate-based model.

Baratz: It’s at a very different point in time. It’s a much more challenging technology to work with. We know it well because we’re also building a gate-model system, so we understand those challenges.

The challenge with gate, in addition to being much more difficult to scale, is that it is very, very sensitive to errors. Most academics believe you will not be able to do anything useful with the gate-model system without full error correction. So think about that. We need full error correction and then we need to scale these systems to hundreds of thousands if not millions of qubits to do anything useful.

Let me give you an example. Think about the application that gets most people really excited about gate-model systems: breaking RSA, factoring semi-primes, Shor’s algorithm, and so on. If you look at work done at Google on what it would take to factor a 2,000-bit semi-prime number, they estimate it would take on the order of 20 million qubits. Twenty million. They’re at like 80 today. If you look at trapped-ion systems, like IonQ, their research indicates it could take 1 billion qubits. They’re at 25, 30 today?

They need full error correction and then to scale. Error correction in and of itself is very challenging. We still haven’t fully nailed the research behind that.

Then let’s talk about scaling. How are you going to scale a trapped ion system? Each ion trap can hold maybe 150 ions, but then you need to error correct them. Best case, maybe it takes 10 to 1 to do error correction, so maybe you get 15 error-corrected qubits in a trap. Now you’ve got to connect the traps and preserve coherence and entanglement across the traps, and that’s very challenging.

So when Jensen says 15 to 30 years, if he’s talking about gate-model systems, I don’t disagree. But when you’re talking about quantum computing in general, including annealing, he’s just totally off-base, because we’re there today.
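The trap arithmetic Baratz walks through above can be written out as a short Python sketch. It uses only his best-case figures (about 150 ions per trap and roughly 10 physical ions per error-corrected qubit); the 1,000-logical-qubit target is a hypothetical chosen for illustration, and the sketch ignores the cost of linking traps, which he identifies as the hard part.

```python
import math

def logical_qubits_per_trap(ions_per_trap, overhead):
    """Error-corrected (logical) qubits in one trap if `overhead` physical ions
    are spent per logical qubit."""
    return ions_per_trap // overhead

def traps_needed(target_logical, ions_per_trap, overhead):
    """Traps that must be interconnected to reach `target_logical` logical qubits."""
    per_trap = logical_qubits_per_trap(ions_per_trap, overhead)
    return math.ceil(target_logical / per_trap)

print(logical_qubits_per_trap(150, 10))  # 15, the figure quoted in the interview
print(traps_needed(1_000, 150, 10))      # 67 traps for a hypothetical 1,000 logical qubits
```

Under these assumptions, even a modest logical-qubit count requires dozens of traps that must preserve coherence and entanglement across their links.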

Sherwood: It sounded like his description of quantum computing was very light switch, on or off, whereas what you’re describing with annealing is much more iterative and incremental?

Baratz: There is a step function for gate that we need to achieve before we can even start considering incremental progress. And that is error correction and interconnectivity, and they’re both very hard problems.

Sherwood: You said yourself it would sound controversial to suggest a straightforward path from 5,000 to 1 million qubits. Is the more difficult constraint for you financial, or technological?

Baratz: If you had asked me that question a year ago, or six months ago, I might have said financial, because we had some challenges in raising capital. At the end of last year, we had more than $160 million in the bank, and given everything that we’ve invested so far, we’re not constrained by that today. But it’s also not so much technological, because it’s pretty straightforward engineering for us.

So when you ask me, “What is our biggest challenge?,” it’s actually embodied in what Jensen said and the way he said it. What he said was, “Quantum computing is 15 to 30 years away from being useful.” He did not say gate model is 15 to 30 years, but annealing is here today. He said quantum computing. So we get papered over with that comment. And it’s just dead wrong and incorrect when you’re talking about annealing. So our biggest challenge is helping the market understand that we are commercial today, we are open for business, and we can support them in solving their hard problems. That’s really what we’re focused on.

Sherwood: One thing that jumped out when you were talking about scalability and constraints was the difference between solving Pattison’s problems versus UPS or FedEx because of the complexity involved. Does this mean you have a built-in limitation for your customer base in light of current computing power, where you can only address smaller issues for firms that might be operating on more of a shoestring budget and be less willing to use a relatively new technology?

Baratz: I don’t think so. I talked about Pattison, but NTT DOCOMO (and I don’t think they’re operating on a shoestring budget; they’re the largest cellular provider in Japan) has us in production today to optimize cell tower operations. We have an application close to production with Ford Otosan around how to optimize the building of automotive bodies. We have worked with Mastercard on how to improve customer loyalty reward programs. These are not small companies operating on a shoestring budget. To reiterate my point, most of the important, hard problems that businesses need to solve across the board are optimization problems, exactly the class of problems we can solve.

It’s recently been shown mathematically that gate-model systems likely will never deliver speed-up on optimization problems. So even when fully error corrected and scaled, you’re not going to use them to solve the class of problems we’re solving today. That’s research that’s been done in the US and Europe, including by researchers at Google.

Sherwood: In terms of your competitors, whether that’s other midsize pure-play companies or Alphabet, as you just referenced, why aren’t more of them focused on annealing, an approach that seems to have a much smoother path to commercialization? What is the fascination or obsession with gate among these players?

Baratz: You’re right. It’s a fascination and obsession rather than a business decision. Here’s the thing to keep in mind: when D-Wave started working on quantum 15 years ago, another reason why we went with annealing was because way back then, it wasn’t even known whether you could build a gate-model system or not. Not only was it an easier technology to work with, there was also line of sight on how to do it.

When everyone else jumped into quantum seven, eight, or nine years ago, the science and the engineering had progressed to the point where it was believed you probably could build a gate-model system. And at that time, it was believed gate-model systems could solve all problems, including optimization. So if you’re Google, IBM, or Amazon and you want to build quantum and you’ve got deep pockets, you’re going to build the more general purpose quantum computer. So they all started down the gate path, recognizing it was going to be challenging.

Two things happened. One, they learned that building these systems is a lot harder than even they thought. Take IonQ: they said they’d be commercial in two to three years… that was two to three years ago. Today, they say, “We’ll be commercial in two to three years.” Because it’s really hard. Two, they got surprised by the fact that gate is not going to help with optimization. So it’s not strictly the most general quantum system. Big surprise. The whole industry was surprised by that.

Sherwood: It seems like since Alphabet’s Willow announcement late last year, we’re getting a press release a day from quantum computing firms touting a “breakthrough” in the space. From your point of view, what constitutes progress? When a layperson is reading these, what should they be on the lookout for?

Baratz: Ultimately, it’s about application benchmarking. Until you see a quantum company talking about applications and how much better or faster they’re solving problems than can be solved classically, you don’t really have much. You’ve got technological hocus-pocus.

What you really want is application results, and we put out as many press releases around application results as we do around technology and innovation. But we’re the only ones who do it because we’re the only ones that are at the point where we can do it. It’s not enough to say, “We’re working with so-and-so on this application.” Great, that’s research. Let’s talk about the actual results you’ve gotten versus classical on that application and why it’s better.

That’s what we try to do always. For instance, with NTT DOCOMO, we talk about how they can support up to 15% more cellphones per cell tower, leveraging our quantum computers. Those are the kind of things you want to hear about.

Besides that, if you really want to just focus on the technology advancement, which is really all you can do right now in the gate space because they’re so far away from applications and being able to demonstrate real value on applications, the Google Willow announcement was a good one. That was the first time that there was demonstrable progress on error correction.

We need — forgive me — quantum leaps in error correction and scale. When IonQ says, “We’ve gone from 28 algorithmic qubits to 33 algorithmic qubits” — OK, fine. You need a billion, roughly. That’s what your own researchers are saying! So you want to start seeing announcements that are showing significant advances in error correction and scale. Those are the things you want to be looking for.

Sherwood: I’d be remiss if I didn’t talk about how investors have been viewing your stock and the industry more broadly. To what do you attribute the surge in your stock and your peers?

Baratz: Quantum is a nascent industry, and in that kind of environment, people are looking for proof points. When they see a significant positive proof point, that generates optimism. The Willow announcement was a really good announcement. That was good research and scientific accomplishment in one of the two really important areas for quantum. That’s a good step forward.

I think, though, that we need to continue making progress and the market needs to be a bit more savvy about the fact that not all quantum is created equal. You need to look at annealing differently from gate, and on the annealing side you need to recognize it’s commercial today and we’re building a business around it today. That’s a sustainable model.

Sherwood: So, looking at your stock price now versus six months ago, do you think the market is pricing the present value of your future discounted cash flows more accurately or less accurately?

Baratz: I won’t say any more than we’re really looking forward to our next earnings call.

Sherwood: Whenever I see a stock or an industry take off like this, I’m thinking, “Either investors are looking for something big soon, or they’re warming to the idea of something really big happening eventually.”

Baratz: The recent increase has been powered by technological excitement, and that’s important. We need to keep focused on that. But we also need to start looking at business progress. The three legs of the stool: technological progress, business progress, and liquidity. I feel actually quite good right now about D-Wave on all three legs.

Interview updated to show that D-Wave is based in Palo Alto. Bloomberg data lists the company as based in Burnaby, British Columbia.