Hoppers, Blackwells, and Rubins: A field guide to the complicated world of Nvidia’s AI hardware

It’s common knowledge that Nvidia is at the core of the AI boom, but understanding what makes a “superchip” or why an NVL72 rack costs millions takes a bit of work.

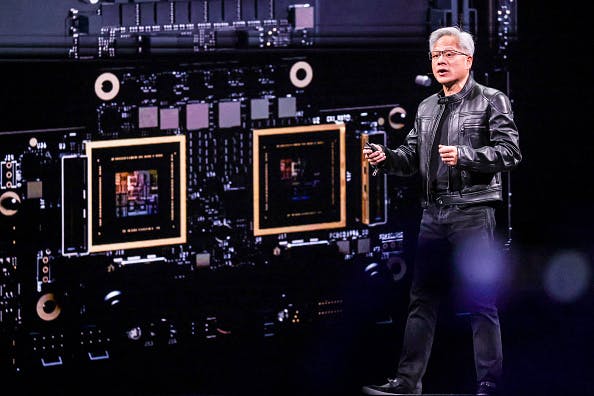

No company has played a more central role in the current AI boom than Nvidia. It designed the chips, networking gear, and software that helped train today’s large language models and scale generative-AI products like ChatGPT to billions of users.

Understanding Nvidia’s AI hardware offerings can be challenging, even for the tech-savvy. While many of the biggest tech companies are hard at work building their own custom silicon to give them an edge in the ultracompetitive AI market, you will find Nvidia’s AI hardware powering pretty much every big AI data center out there today.

Some estimates have Nvidia owning as much as 98% of the data center GPU market. This has fueled the company’s meteoric rise to become one of the world’s largest companies.

A chip by any other name...

To start understanding the landscape of Nvidia’s chips, it’s helpful to know what each generation is called and which chips came out in that time. Going all the way back to 1999, Nvidia has named its various chip architectures after famous figures from science and mathematics.

Earlier generations of Nvidia’s chip architecture powered the rise of advanced video graphics cards (in case you didn’t know, GPU stands for graphics processing unit) that helped propel the video game industry to new heights, but GPUs’ ability to run massively parallel vector math turned out to make them perfectly suited for AI.

The hot H100

The breakout star of Nvidia’s hardware offerings was undoubtedly the most powerful Hopper series chip, the H100 Tensor Core GPU. Announced in March 2022, this GPU featured the new “Transformer Engine,” a dedicated accelerator for the kinds of processing that large language models rely on for both training and “inference” (running a model). Nvidia claimed inference speedups of up to 30x over the previous generation’s fastest chip, the A100.

After OpenAI’s ChatGPT exploded onto the scene, demand for the H100 led tech companies to stockpile hundreds of thousands of the GPUs to help build bigger and faster large language models.

The H100s are estimated to cost between $20,000 and $40,000 each.

Blackwell “superchip”

In the fast-moving AI industry, while the H100 is still a hot item, the latest chip everyone is turning to is the GB200 — what Nvidia calls the “Grace Blackwell superchip.” This chip combines two Blackwell series B200 GPUs and a “Grace” CPU in one package.

But if you’re in the market for such powerful AI hardware, it’s likely you want dozens, hundreds, or even thousands of these chips wired up with the fastest interconnections you can get. That’s where the “GB200 NVL72” comes in. The NVL72 comes packed with 36 of the GB200 superchips — so 36 Grace CPUs and 72 of the B200 GPUs. Confused yet?

And if you’re going on a GPU shopping spree, you’d better have lined up some VCs with deep pockets. Each GB200 superchip is estimated to cost between $60,000 and $70,000, while a fully equipped NVL72 rack is estimated to cost roughly $3 million, as it requires not only the pricey superchips but also expensive networking and liquid cooling.
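For readers who want to check the math, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above (36 superchips per rack, two GPUs and one CPU per superchip, $60,000 to $70,000 per superchip, roughly $3 million per rack). All of those prices are outside estimates rather than list prices, and the constant and function names are made up for illustration.

```python
# Back-of-the-envelope math for one GB200 NVL72 rack.
# All figures are the estimates quoted in the article, not official pricing.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2            # two B200 GPUs per GB200
CPUS_PER_SUPERCHIP = 1            # one Grace CPU per GB200
SUPERCHIP_PRICE_RANGE = (60_000, 70_000)   # estimated USD, low and high
RACK_PRICE_ESTIMATE = 3_000_000            # estimated USD, fully equipped


def rack_summary():
    """Return chip counts and a rough cost split for one rack."""
    low, high = (SUPERCHIPS_PER_RACK * price for price in SUPERCHIP_PRICE_RANGE)
    return {
        "gpus": SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP,
        "cpus": SUPERCHIPS_PER_RACK * CPUS_PER_SUPERCHIP,
        "superchip_cost_low": low,
        "superchip_cost_high": high,
        # Whatever is left of the rack estimate after the superchips:
        # networking, liquid cooling, and everything else.
        "remainder_low": RACK_PRICE_ESTIMATE - high,
        "remainder_high": RACK_PRICE_ESTIMATE - low,
    }


if __name__ == "__main__":
    for name, value in rack_summary().items():
        print(f"{name}: {value:,}")
```

Under these assumptions, the superchips alone would account for roughly $2.2 million to $2.5 million of the rack’s price, leaving somewhere around $0.5 million to $0.8 million for networking, cooling, and the rest. Treat that split as illustrative, since every input is an estimate.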

If that’s too rich for you, you can always turn to AI investor darling CoreWeave, which advertises access to its batch of GB200 NVL72s starting at $42 per hour. CoreWeave says it has over 250,000 Nvidia GPUs in its data centers.

According to Bloomberg, the “Stargate” mega data center project backed by OpenAI, SoftBank, and Oracle is planning on installing 400,000 of the GB200 superchips.

And Meta CEO Mark Zuckerberg has stated that he expects the company to have over 1.3 million GPUs by the end of 2025.

Leaps in performance

When you’re talking about leaps forward in AI, it’s important to remember that rather than slow incremental bumps, each generation of chips is making exponential gains in a metric known as FLOPS (floating-point operations per second), which measures raw computing performance.

Rubin matters

All this Nvidia jargon aside, there’s one model name you should pay attention to: Rubin, which will be the next leap forward in compute power.

Next year we’ll see the first of the Rubin architecture chips, the “Vera Rubin” superchip, named after the American astronomer whose work on galaxy rotation provided key evidence for dark matter.

Following the Vera Rubin chip release will be the Vera Rubin NVL144 (144 GPUs) and then Vera Rubin Ultra NVL576 (576 GPUs) in the second half of 2027.

Phew. Got all that?