OpenAI releases GPT-5.1, which can be “Professional,” “Candid,” or “Quirky”

The new “more conversational” model follows instructions better, but backslides on some safety tests.

Today OpenAI released GPT-5.1, an update that aims to make ChatGPT “more conversational.” The model comes in two versions: GPT-5.1 Instant (“now warmer, more intelligent, and better at following your instructions”) and GPT-5.1 Thinking (“now easier to understand and faster on simple tasks, more persistent on complex ones”).

Although an earlier update this year was rolled back for being overly sycophantic, the new model adopts a chummier conversational tone, one that the company says “surprises people with its playfulness” in testing.

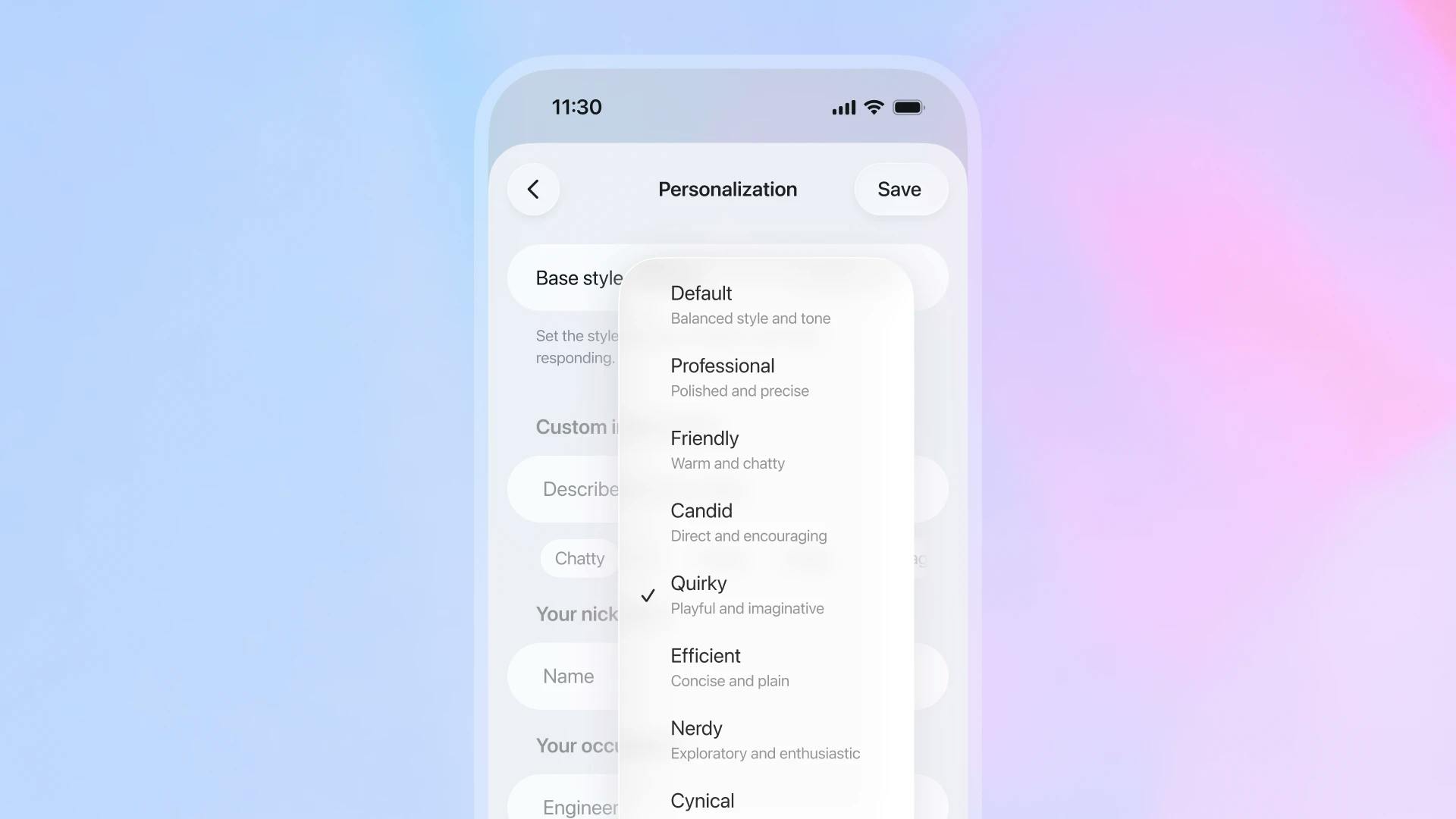

Users now have finer control over ChatGPT’s “personality,” with new settings for “Professional,” “Candid,” and “Quirky.”

In the model’s system card, OpenAI details how the new GPT-5.1 models compare with the earlier GPT-5 models on internal benchmarks for disallowed content.

The company has said it is prioritizing the addition of new checks to help users who may be suffering a mental health crisis, after a series of alarming incidents where ChatGPT encouraged self-harm and reinforced delusional behavior.

Two tests were included for the first time with this release: “mental health” and “emotional reliance.” GPT-5.1 Thinking scored slightly lower than its predecessor, GPT-5 Thinking, on 9 of 13 testing categories, and GPT-5.1 Instant scored lower than GPT-5 Instant on 5 of 13.

More thinking, more tokens

OpenAI says that GPT-5.1 Thinking now spends less time on simple tasks and more time on difficult problems. This is measured by the number of model-generated tokens (tiny bits of text). Based on a chart in the announcement, the very toughest queries handled by GPT-5.1 Thinking will use 71% more tokens than before. That’s a lot more tokens, and a lot more computing!

All those tokens can add up. Every time OpenAI’s customer-facing models gobble up more computing cycles, the company spends more on “inference,” or running the models (as opposed to the more resource-intensive training process that happens while building the models). Enterprise customers who access the models through OpenAI’s API pay by the token, but free users of the chat interface do not.
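To make that arithmetic concrete, here is a rough back-of-the-envelope sketch in Python. Only the 71% figure comes from the chart in the announcement; the baseline token count and the per-million-token price are made-up placeholders for illustration, not OpenAI’s actual usage numbers or rates.

```python
def token_cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of generating `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd


# Hypothetical inputs, chosen only to illustrate the scaling.
BASELINE_TOKENS = 10_000        # assumed tokens for a tough query before the update
PRICE_PER_MILLION_USD = 10.0    # assumed flat price, not a real OpenAI rate
TOKEN_INCREASE = 0.71           # the 71% increase cited from the announcement chart

# Token count for the same query after a 71% increase.
new_tokens = round(BASELINE_TOKENS * (1 + TOKEN_INCREASE))

baseline_cost = token_cost_usd(BASELINE_TOKENS, PRICE_PER_MILLION_USD)
new_cost = token_cost_usd(new_tokens, PRICE_PER_MILLION_USD)

print(f"Tokens per query: {BASELINE_TOKENS} -> {new_tokens}")
print(f"Cost per query:   ${baseline_cost:.3f} -> ${new_cost:.3f}")
```

Under flat per-token pricing, cost scales in step with token count, so a 71% jump in tokens means a 71% jump in the bill for that query. For an API customer, that lands on the invoice; for a free chat user, OpenAI absorbs the equivalent compute cost.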

OpenAI is a private company, so its finances aren’t public, but a new report from the Financial Times raises the question of how much all these “thinking” models are costing the company. While The Information recently reported that OpenAI spent $2.5 billion in the first half of 2025, AI skeptic, podcaster, and writer Ed Zitron told the FT he has seen internal OpenAI figures showing that the company’s cash burn for the first half of the year was much higher, close to $5 billion.

To satisfy the $1 trillion in recent deals it has signed on to, OpenAI will need to find a way to generate more revenue.